Microbenchmarking Unified Memory in CUDA 6.0

Table of Contents

1 Introduction

CUDA 6.0 introduced Unified Memory, a new feature that makes it easier to write CUDA programs by automating data transfers between the CPU and GPU. A key problem with any automated data transfer solution is that it can introduce redundant transfers. We studied this problem in our PACT 2012 paper and concluded that redundant transfers can only be minimized by maintaining full runtime coherence status on both the CPU and GPU.

In this document, I evaluate CUDA 6.0's Unified Memory (UM) mechanism on a Kepler K20Xm using a number of microbenchmarks derived from our previous work. These microbenchmarks are described in the sections below and the source code is also available for download. Using these microbenchmarks, I find that UM can introduce redundant transfers in common usage patterns.

The microbenchmarks show that:

- UM assumes that the GPU always has non-stale (i.e. "freshest") data. This leads to redundant non-stale to non-stale transfers from the GPU to the CPU.

- UM does not check if the GPU actually needs the data being transferred from the CPU. This leads to potentially redundant eager transfers.

In the style of Table 1 in our paper, UM can be summarized as:

| Scheme | Only Non-stale to Stale | Prevents Eager | ||

|---|---|---|---|---|

| CPU-to-GPU | GPU-to-CPU | CPU-to-GPU | GPU-to-CPU | |

| CUDA 6.0 UM | Y | N | N | Y |

2 Setup

The hardware and software setup used herein is:

GPU: Tesla K20Xm (3.5) Managed memory supported: 1 Driver: 6000 Runtime: 6000

We use the nvprof command line profiler to obtain the following statistics:

- CPU Page Faults

- Bytes transferred from GPU to CPU (DtoH)

- Bytes transferred from CPU to GPU (HtoD)

3 Microbenchmarks

3.1 automem

The automem microbenchmark verifies Unified Memory exists and is working correctly.

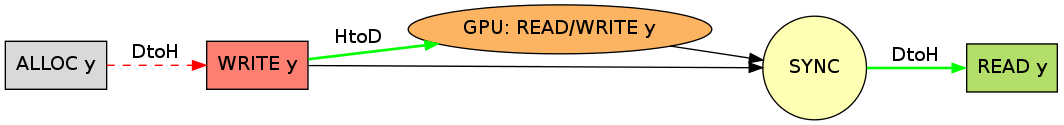

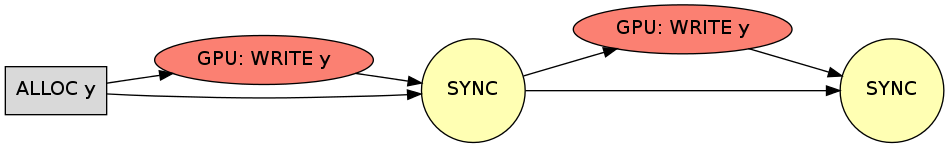

As the graph below shows, it allocates a Unified Memory variable y, initializes it on the CPU, then invokes a kernel that doubles the value of the variable, and finally the CPU prints out the doubled value.

All the square boxes in the graph are CPU actions, while ellipses are GPU actions.

The circular SYNC corresponds to a cudaDeviceSynchronize() call.

The labels on the edges are explained below.

The profiler output for automem is:

| Unified Memory CPU page faults | 2 |

|---|---|

| Unified Memory Memcpy DtoH | 8192 |

| Unified Memory Memcpy HtoD | 4096 |

Each page fault corresponds exactly to a DtoH transfer and are marked in the graph. I assume HtoD transfers only occur on a kernel call. In the graph, the green arrows indicate required transfers while the red dashed arrows indicate redundant transfers.

For automem, it is clear that a page has to be transferred from the CPU to the GPU ("HtoD") after initialization since the CPU has the non-stale copy. It is also clear that a page must to be transferred back from the GPU to the CPU ("DtoH") since the GPU modifies the shared variable. This happens when the CPU attempts to print the value of y after the kernel. However, the DtoH that occurs when y is being initialized is not necessary – neither the GPU nor the CPU have the non-stale copy immediately after the allocation of a Unified Memory variable. This page fault during initialization can only happen if UM assumes that the GPU has a non-stale copy after allocation while the CPU has a stale copy. The nokernel microbenchmark below shows that this is indeed the case.

3.2 nokernel

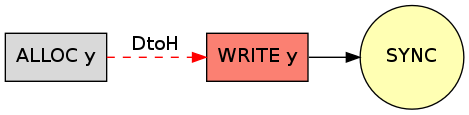

The nokernel microbenchmark allocates a Unified Memory variable and initializes it on the CPU. There are no kernel calls.

The profiler output for nokernel is:

| Unified Memory CPU page faults | 1 |

|---|---|

| Unified Memory Memcpy DtoH | 4096 |

The profiler log shows that nokernel exhibits a page fault and a transfer from the GPU to the CPU. The initial coherence status assigned to the devices is therefore not the same, but the GPU is always assumed to contain the non-stale data initially. Thus, initializing data on the CPU, a very common pattern, will incur redundant data DtoH transfers under UM. Further, as the the next microbenchmark readonly shows, UM always assumes the GPU has a non-stale copy after a kernel call too.

3.3 readonly

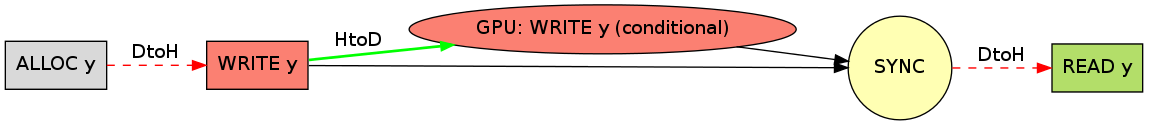

The readonly microbenchmark contains a kernel that writes to a Unified Memory variable conditionally. In the standard run, the kernel does not write to the Unified Memory variable.

The profiler output for readonly is:

| Unified Memory CPU page faults | 2 |

|---|---|

| Unified Memory Memcpy DtoH | 8192 |

| Unified Memory Memcpy HtoD | 4096 |

Since the kernel did not change y, there is no need for a DtoH transfer when the CPU reads y. But as the profiler shows, readonly incurs page faults both during initialization as well as during the read of y. So it seems that in UM, a kernel is always assumed to write to Unified Memory variables. Therefore, Unified Memory variables that are only read by GPU will incur redundant transfers when accessed by the CPU after a kernel call. Further, as the multivars microbenchmark next shows, there seems to be no hardware ability to detect which variables a GPU kernel reads and/or writes.

3.4 multivars

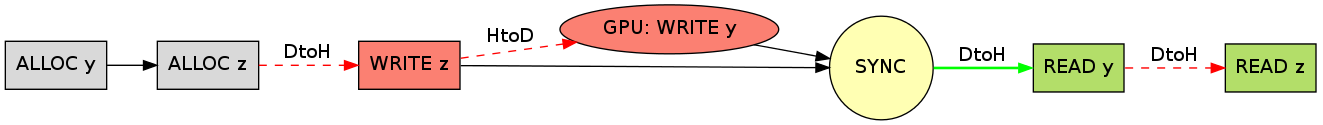

The multivars microbenchmark initializes two Unified Memory variables, y and z, where z is not used by the kernel. The variables y and z are sized to span 1 and 160 CPU pages respectively.

The profiler log for multivars is:

| Unified Memory CPU page faults | 321 |

|---|---|

| Unified Memory Memcpy DtoH | 1314816 |

| Unified Memory Memcpy HtoD | 655360 |

The log demonstrates that UM transfers all CPU-modified shared data, regardless of whether a GPU kernel actually reads that data. Here, the HtoD numbers reflect the redundant eager transfer of z's 160 pages. The DtoH numbers reflect 160 pages of z transferred during initialization and after the kernel call, 160 pages of z + 1 page of y.

Since UM supports recursive data structures (like trees, linked lists, etc.), it cannot examine kernel arguments to limit which pages must be transferred.

3.5 privmem

The privmem microbenchmark exercises an important use-case (GPU private memory) where GPU kernels share some data amongst themselves but the CPU never reads or writes to it. Ideally, we should never see any Unified Memory transfers of such data.

As expected, the profiler does not log any Unified Memory transfers, so UM does the right thing.

4 Download

The source code and supporting scripts for the UM microbenchmarks is available.

5 Conclusions

CUDA 6.0's UM evaluated on a Kepler K20Xm:

- assumes that the GPU always contains the most up-to-date (non-stale) data

- transfers all modified data from the CPU to the GPU regardless of whether it will be read, causing redundant eager transfers

- transfers all data from the GPU to the CPU even if the GPU did not modify the data, causing redundant non-stale to non-stale transfers

Thus, it exhibits redundant transfers.