Chenliang Xu

Audio-Visual Scene Understanding

Graduate Students: Yapeng Tian, Lele Chen, Hao Huang, Wei Zhang, Sefik Emre Eskimez, Bochen Li, Rui Lu, Yujia Yan

Undergraduate Students: Bryce Yahn, Patrick Phillips, Justin Goodman, Marc Moore, Chenxiao Guan

Award Number: NSF IIS 1741472

Award Title: BIGDATA: F: Audio-Visual Scene Understanding

Award Amount: $666,000.00

Duration: 09/01/2017 - 08/31/2021 (Estimated)

Overview of Goals and Challenges:

Understanding the scenes around us, i.e., recognizing objects, human actions, and events, and inferring their spatial, temporal, correlative, and causal relations, is a fundamental capability of human intelligence. Similarly, designing computer algorithms that can understand scenes is a fundamental problem in artificial intelligence. Humans consciously or unconsciously use all five senses (vision, audition, taste, smell, and touch) to understand a scene, as different senses provide complementary information. For example, watching a movie with the sound muted makes it very difficult to follow; walking down a street with eyes closed and no other guidance can be dangerous. Existing machine scene understanding algorithms, however, are designed to rely on a single modality. Taking the two most commonly used senses, vision and audition, as an example, there are scene understanding algorithms designed for each modality separately, but no systematic investigation has been conducted into integrating the two towards more comprehensive audio-visual scene understanding. Designing algorithms that jointly model the audio and visual modalities towards complete audio-visual scene understanding is important, not only because this is how humans understand scenes, but also because it will enable novel applications in many fields. These fields include multimedia (video indexing and scene editing), healthcare (assistive devices for visually impaired and hearing-impaired people), surveillance and security (comprehensive monitoring of suspicious activities), and virtual and augmented reality (generation and alteration of visuals and/or soundtracks).

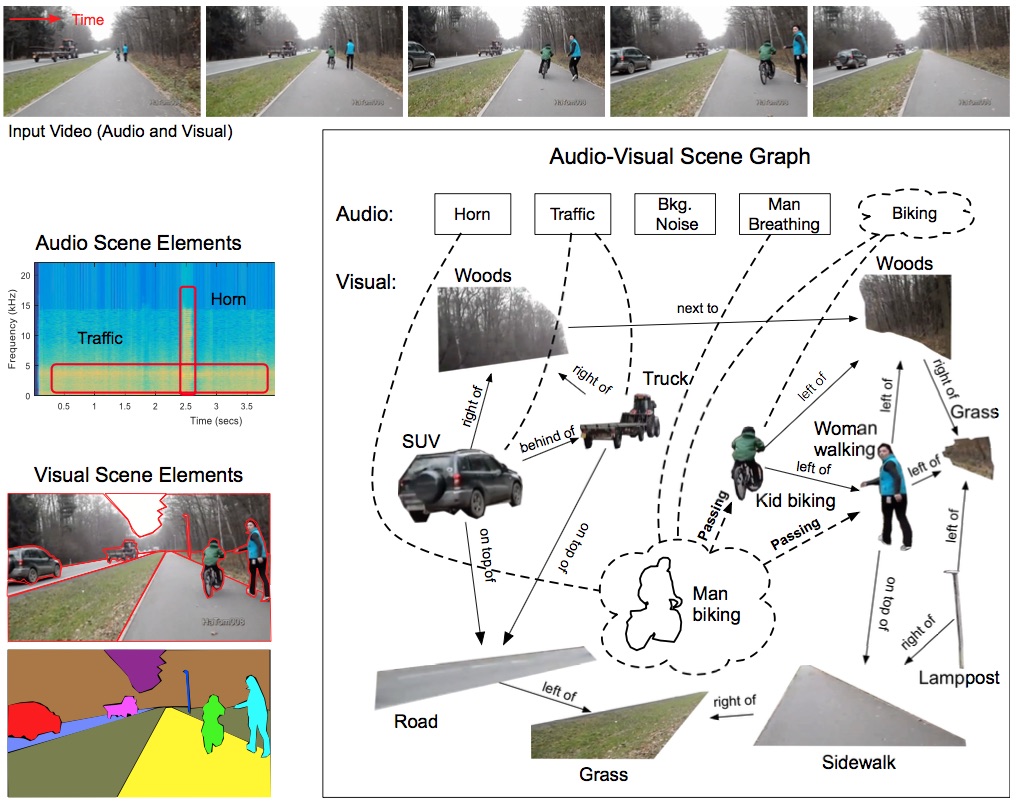

This project aims to achieve human-like audio-visual scene understanding that overcomes the limitations of single-modality approaches through big data analysis of Internet videos. The core idea is to learn to parse a scene into elements and infer their relations, i.e., to form an audio-visual scene graph. Specifically, an element of the audio-visual scene can be a joint audio-visual component of an event when the event shows correlated audio and visual features. It can also be an audio-only or a visual-only component if the event appears in only one modality. The relations between the elements include spatial and temporal relations at a lower level, as well as correlative and causal relations at a higher level. Through this scene graph, information across the two modalities can be extracted, exchanged, and interpreted. The investigators propose three main research thrusts: (1) learning joint audio-visual representations of scene elements; (2) learning a scene graph to organize scene elements; and (3) cross-modality scene completion. Each of the three thrusts explores one dimension of audio-visual scene understanding, yet they are interconnected. For example, the audio-visual scene elements are nodes in the scene graph, and the scene graph, in turn, guides the learning of relations among scene elements with structured information. Cross-modality scene completion generates missing data in the scene graph and is necessary for good audio-visual understanding of the scene.
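To make the scene-graph structure more concrete, here is a minimal, hypothetical sketch of how such a graph might be represented in code. It is an illustration only, not the project's implementation: the class names (`SceneElement`, `Relation`, `SceneGraph`), the use of time spans in seconds, and the rule that derives a temporal "before" relation from non-overlapping spans are all assumptions. It encodes the three kinds of elements (audio-only, visual-only, and joint audio-visual) and the four relation types, split into lower-level (spatial, temporal) and higher-level (correlative, causal).

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Modality(Enum):
    AUDIO = "audio"                 # event present only in the soundtrack
    VISUAL = "visual"               # event present only in the frames
    AUDIO_VISUAL = "audio-visual"   # event with correlated audio and visual features


class RelationType(Enum):
    SPATIAL = "spatial"             # lower-level
    TEMPORAL = "temporal"           # lower-level
    CORRELATIVE = "correlative"     # higher-level
    CAUSAL = "causal"               # higher-level


LOW_LEVEL = {RelationType.SPATIAL, RelationType.TEMPORAL}


@dataclass
class SceneElement:
    name: str          # hypothetical human-readable label, e.g. "dog barking"
    modality: Modality
    start: float       # assumed start time in seconds
    end: float         # assumed end time in seconds


@dataclass
class Relation:
    subject: int       # index into SceneGraph.elements
    obj: int           # index into SceneGraph.elements
    kind: RelationType
    label: str         # hypothetical predicate, e.g. "before", "causes"

    @property
    def is_low_level(self) -> bool:
        return self.kind in LOW_LEVEL


@dataclass
class SceneGraph:
    elements: List[SceneElement] = field(default_factory=list)
    relations: List[Relation] = field(default_factory=list)

    def add_element(self, element: SceneElement) -> int:
        """Add a node and return its index."""
        self.elements.append(element)
        return len(self.elements) - 1

    def relate(self, subject: int, obj: int, kind: RelationType, label: str) -> None:
        """Add a directed, labeled edge between two nodes."""
        self.relations.append(Relation(subject, obj, kind, label))

    def add_temporal_relations(self) -> None:
        """Assumed rule: element A is 'before' B if A ends no later than B starts."""
        for i, a in enumerate(self.elements):
            for j, b in enumerate(self.elements):
                if i != j and a.end <= b.start:
                    self.relate(i, j, RelationType.TEMPORAL, "before")

    def by_modality(self, modality: Modality) -> List[str]:
        """Names of all elements observed in the given modality."""
        return [e.name for e in self.elements if e.modality == modality]


if __name__ == "__main__":
    g = SceneGraph()
    glass = g.add_element(SceneElement("glass breaking", Modality.AUDIO_VISUAL, 1.0, 1.5))
    dog = g.add_element(SceneElement("dog barking", Modality.AUDIO, 2.0, 4.0))  # dog off-screen
    g.add_element(SceneElement("person walking", Modality.VISUAL, 0.0, 5.0))
    g.add_temporal_relations()                                  # lower-level relation
    g.relate(glass, dog, RelationType.CAUSAL, "causes")         # higher-level relation
    for r in g.relations:
        print(g.elements[r.subject].name, r.label, g.elements[r.obj].name)
```

In the project, elements and relations are meant to be learned from video rather than entered by hand; they are hard-coded here only to show how nodes from different modalities and relations at different levels could coexist in one graph.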

Current Results:

Data, demos, and software are available for download via the individual tasks listed below.

Broader Impacts:

This research is of key interest to both the computer vision and computer audition communities. First, scene understanding is a long-standing topic in both communities, but it has been tackled independently in each. This project ties research in these communities together and advances both. Second, our learned bimodal representations can be used to solve other problems where relying on a single modality is difficult. Third, our audio-visual computational models raise interesting scientific questions about how humans understand videos, which can inform the design of future AI systems.

This research is intellectually transformative in advancing multi-modal modeling and robot perception. The methodologies and techniques developed in this project for learning audio-visual bimodal representations can be applied to multi-modal modeling with data from other sources, such as tactile sensing, GPS, radar, and lasers, leading to a coherent robot perception system. One immediate application could be perception in autonomous vehicles. The techniques developed in this research also enable novel applications in many other fields, including multimedia (video indexing and scene editing), healthcare (assistive devices for visually impaired and hearing-impaired people), surveillance and security (comprehensive monitoring of suspicious activities), and virtual and augmented reality (generation and alteration of visuals and/or soundtracks).

- PI Xu taught a workshop related to this project to a cohort of 17 high school students through the University of Rochester's Pre-College Program in summer 2018. He also hosted lab visits with project-related demos for students.

- Co-PI Duan taught a summer mini-course related to this project to high school students (mostly underrepresented minority students) at the Upward Bound program organized by the Kearns Center at the University of Rochester.

- The team collaborates with Prof. Ross Maddox on applying our talking-face system to help hearing-impaired people better understand speech.

Publications from the Team:

- Can audio-visual integration strengthen robustness under multimodal attacks? Y. Tian and C. Xu. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

- Cyclic co-learning of sounding object visual grounding and sound separation. Y. Tian, D. Hu, and C. Xu. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

- Unified multisensory perception: Weakly-supervised audio-visual video parsing. Y. Tian, D. Li, and C. Xu. European Conference on Computer Vision (ECCV), 2020.

- Talking-head generation with rhythmic head motion. L. Chen, G. Cui, C. Liu, Z. Li, Z. Kou, Y. Xu, and C. Xu. European Conference on Computer Vision (ECCV), 2020.

- What comprises a good talking-head video generation? L. Chen, G. Cui, Z. Kou, H. Zheng, and C. Xu. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2020.

- Co-learn sounding object visual grounding and visually indicated sound separation in a cycle. Y. Tian, D. Hu, and C. Xu. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2020.

- Deep audio prior: Learning sound source separation from a single audio mixture. Y. Tian, C. Xu, and D. Li. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2020.

- Weakly-supervised audio-visual video parsing toward unified multisensory perception. Y. Tian, D. Li, and C. Xu. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2020.

- End-to-end generation of talking faces from noisy speech. S. E. Eskimez, R. K. Maddox, C. Xu, and Z. Duan. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020.

- Can multisensory training aid visual learning?: A computational investigation. R. A. Jacobs and C. Xu. Journal of Vision, 2019.

- Hierarchical cross-modal talking face generation with dynamic pixel-wise loss. L. Chen, R. Maddox, Z. Duan, and C. Xu. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- Audio-visual interpretable and controllable video captioning. Y. Tian, C. Guan, J. Goodman, M. Moore and C. Xu. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2019.

- Sound to visual: Hierarchical cross-modal talking face video generation. L. Chen, H. Zheng, R. Maddox, Z. Duan and C. Xu. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2019.

- Audio-visual event localization in the wild. Y. Tian, J. Shi, B. Li, Z. Duan and C. Xu. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2019.

- Noise-resilient training method for face landmark generation from speech. S. E. Eskimez, R. K. Maddox, C. Xu, and Z. Duan. IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), 2019.

- Online audio-visual source association for chamber music performances. B. Li, K. Dinesh, C. Xu, G. Sharma, and Z. Duan. Transactions of the International Society for Music Information Retrieval, 2019.

- Creating a multi-track classical music performance dataset for multi-modal music analysis: challenges, insights, and applications. B. Li, X. Liu, K. Dinesh, Z. Duan, and G. Sharma. IEEE Transactions on Multimedia (TMM), 2019.

- Audio-visual analysis of music performances: Overview of an emerging field. Z. Duan, S. Essid, C. Liem, G. Richard, and G. Sharma. IEEE Signal Processing Magazine, 2019.

- Audio-visual deep clustering for speech separation. R. Lu, Z. Duan, and C. Zhang. IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), 2019.

- Audio-visual event localization in unconstrained videos. Y. Tian, J. Shi, B. Li, Z. Duan and C. Xu. European Conference on Computer Vision (ECCV), 2018.

- Lip movements generation at a glance. L. Chen, Z. Li, R. Maddox, Z. Duan and C. Xu. European Conference on Computer Vision (ECCV), 2018.

- Generating talking face landmarks from speech. S. E. Eskimez, R. Maddox, C. Xu and Z. Duan. International Conference on Latent Variable Analysis and Signal Separation, 2018.

- Listen and look: audio-visual matching assisted speech source separation. R. Lu, Z. Duan, and C. Zhang. IEEE Signal Processing Letters, 2018.

- Skeleton plays piano: End-to-end online generation of pianist body movements from MIDI performance. B. Li, A. Maezawa, and Z. Duan. International Society for Music Information Retrieval Conference (ISMIR), 2018.

- Score-aligned polyphonic microtiming estimation. X. Wang, R. Stables, B. Li, and Z. Duan. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018.

- Deep cross-modal audio-visual generation. L. Chen, S. Srivastava, Z. Duan, and C. Xu. ACM International Conference on Multimedia Thematic Workshops (ACMMMW), 2017.

Disclaimer: Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Point of Contact: Chenliang Xu

Date of Last Update: July 2020