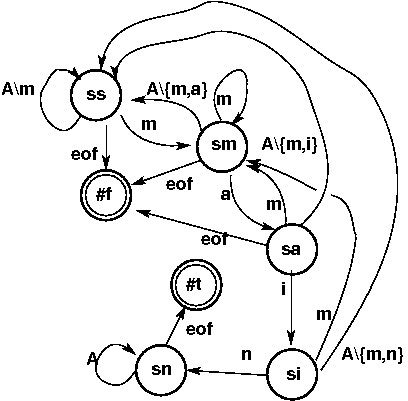

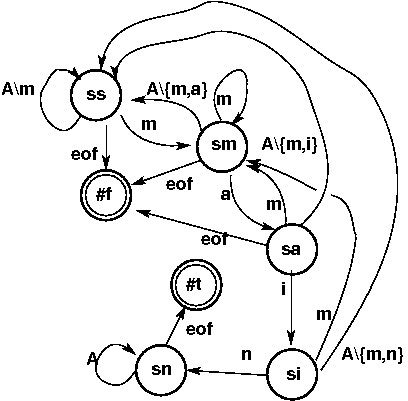

FA for Finding MAIN...diagram

This lecture is stolen from Michael Scott's Programming Language Pragmatics and Kenneth H. Rosen's Discrete Mathematics and its Applications, both on reserve in Carlson, and there should be e-reserves of the relevant bits (as of 9/12/13 we're still working on that.)

Notation varies, but here

Start in the start state. If the next input symbol matches the label on a transition from the current state to a new state, go to the new state; repeat. If not at EOF and no move is possible, reject. At EOF, accept iff in an accepting state. (Don't want to have to be at EOF? Then convert your FA to one with loops on the F states that gobble the rest of the input.) Similarly, an FA can be fixed so it never gets ``stuck''. Some formulations have the FA producing output or performing other actions, either at each state (Moore machine) or on each transition (Mealy machine).

Let FA = {S,C,T,S0,F}: states S, alphabet C, transitions T, start state S0, and accepting (final) states F. Here S0 = ss.

S = {ss, sm, sa, si, sn}

C = {a,b,..z,A,B,..Z,0,1,..9,+,-,*,/,etc.}

F = {sn}

T = {(ss,m,sm), (ss,C-m,ss),

(sm,a,sa), (sm,m,sm), (sm,C-a-m,ss),

(sa,i,si), (sa,m,sm), (sa,C-i-m,ss),

(si,n,sn), (si,m,sm), (si,C-n-m,ss),

(sn,C,sn)}

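    #include <stdio.h>

    int main(void)
    {
        enum { ss, sm, sa, si, sn } state = ss;
        int c;      /* int, not char: EOF must differ from every character */

        while ((c = getchar()) != EOF) {
            switch (state) {
            case ss:
                if (c == 'm') state = sm;
                break;
            case sm:
                switch (c) {
                case 'm': break;                /* stay in sm */
                case 'a': state = sa; break;
                default:  state = ss;
                }
                break;
            case sa:
                switch (c) {
                case 'm': state = sm; break;
                case 'i': state = si; break;
                default:  state = ss;
                }
                break;
            case si:
                switch (c) {
                case 'm': state = sm; break;
                case 'n': state = sn; break;
                default:  state = ss;
                }
                break;
            case sn:
                break;                          /* (sn,C,sn): once found, stay */
            }
        }
        /* Accept iff we end in sn, even when "main" is the last thing read. */
        puts(state == sn ? "yes" : "no");
        return 0;
    }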

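This consumes all its input, but note that scanners don't: they find the longest acceptable prefix and send that to the application, expecting to be called again for the next token. Finding that prefix can take lookahead. Note Fortran's DO 100 I=1,50 or DO100I=1.50 problem (blanks are insignificant, so only the comma or period tells a loop header from an assignment to the variable DO100I), or Pascal's 3.14 vs 3..14 problem (a real number vs the start of a subrange).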
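The 3.14 vs 3..14 case can be sketched in a few lines. This is only an illustration, not how any particular Pascal scanner is written: `scan_number` is a hypothetical helper, and the token shape it assumes (digits, optionally followed by a period and more digits) is a simplification of real numeric literals.

```c
#include <ctype.h>
#include <stddef.h>

/* Length of the longest prefix of s that looks like  digit+ ( '.' digit+ )?
   Returns 0 if s does not start with a digit.  (Hypothetical helper.) */
size_t scan_number(const char *s)
{
    size_t i = 0;

    while (isdigit((unsigned char)s[i]))
        i++;
    if (i == 0)
        return 0;
    /* Consume the '.' only if a digit follows it.  This second character of
       lookahead is what separates 3.14 from 3..14. */
    if (s[i] == '.' && isdigit((unsigned char)s[i + 1])) {
        i++;
        while (isdigit((unsigned char)s[i]))
            i++;
    }
    return i;
}
```

On "3.14" the whole string is one token; on "3..14" the scanner stops after the 3 and leaves the ".." for the next call.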

Non-unique ``next state'': more than one transition from a state on the same input, or an ε (``spontaneous'') transition taken with no input.

Acceptance if there exists a series of valid transitions that gets to accepting state. Intuitively, explore all in parallel (unlimited free parallelism) or guess correctly each time (precognition).

Implement by: keeping track of possible states after each input OR converting into a DFA (surprisingly this works!).

Simulate with input mmainm.

s0

m: s0 or s1

m: the s1 path dies (no m transition out of s1); from s0, again s0 or s1

a: s0 or s2

i: s0 or s3

n: s0 or s4

m: s0 or s1 or s4; end of input, and s4 accepts.
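The "keep track of possible states" option is short to write down if the set of current states is a bitmask. The sketch below assumes the NDFA implied by the trace (the diagram itself is not reproduced here): s0 loops on every symbol and also moves to s1 on m, then s1 to s2 on a, s2 to s3 on i, s3 to s4 on n, and s4 loops on every symbol. The names `nfa_step` and `nfa_accepts` are made up for the illustration.

```c
/* Bit k of a mask stands for NDFA state sk. */
enum { S0 = 1u << 0, S1 = 1u << 1, S2 = 1u << 2, S3 = 1u << 3, S4 = 1u << 4 };

/* All states reachable from any state in `set` on input character c. */
unsigned nfa_step(unsigned set, char c)
{
    unsigned next = 0;

    if (set & S0) {
        next |= S0;                         /* s0 loops on everything */
        if (c == 'm')
            next |= S1;
    }
    if ((set & S1) && c == 'a') next |= S2;
    if ((set & S2) && c == 'i') next |= S3;
    if ((set & S3) && c == 'n') next |= S4;
    if (set & S4) next |= S4;               /* s4 loops on everything */
    return next;
}

/* 1 if some path through the NDFA ends in s4 after reading all of input. */
int nfa_accepts(const char *input)
{
    unsigned set = S0;

    for (; *input; input++)
        set = nfa_step(set, *input);
    return (set & S4) != 0;
}
```

Calling `nfa_accepts("mmainm")` walks through the same sets as the trace and ends in {s0, s1, s4}, which contains s4.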

Automata A and B are equivalent if they accept exactly the same strings: if there is a path in A from S0 to F with some sequence of labels, then there is a path in B from its start state to one of its accepting states with the same labels, and vice versa.

Each DFA is an NDFA by definition, and the subset construction allows us to convert NDFA to equivalent DFA.

As in simulation, trace the possible paths through the NDFA and record all the possible states we could be in given the input seen so far. We make a DFA with this subset as a single state. Clearly works, but could need 2^N states...

Start with previous 5-state NDFA to recognize ``main''.

Here are all 2^5 = 32 subsets:

{}, {s0}, {s1}, {s2}, {s3}, {s4},

{s0, s1}, {s0, s2}, {s0, s3}, {s0, s4},

{s1, s2}, {s1, s3}, {s1, s4}, {s2, s3},

{s2, s4}, {s3, s4},

{s0, s1, s2}, {s0, s1, s3}, {s0, s1, s4},

{s0, s2, s3}, {s0, s2, s4}, {s0, s3, s4},

{s1, s2, s3}, {s1, s2, s4}, {s1, s3, s4},

{s2, s3, s4},

{s0, s1, s2, s3}, {s0, s1, s2, s4},

{s0, s1, s3, s4}, {s0, s2, s3, s4},

{s1, s2, s3, s4}, {s0, s1, s2, s3, s4}

Empty state means ``completely stuck''.

Simulating:

{s0}: m → {s0,s1}

{s0}: not(m) → {s0}

{s0,s1}: m → {s0,s1}

{s0,s1}: a → {s0,s2}

{s0,s1}: not(m,a) → {s0}

{s0,s2}: m → {s0,s1}

{s0,s2}: i → {s0,s3}

{s0,s2}: not(m,i) → {s0}

{s0,s3}: m → {s0,s1}

{s0,s3}: n → {s0,s4}*

{s0,s3}: not(m,n) → {s0}

But also

{s0,s4}: m → {s0,s1,s4}*

{s0,s1,s4}: a → {s0,s2,s4}*

{s0,s2,s4}: i → {s0,s3,s4}*

{s0,s3,s4}: n → {s0,s4}*

States marked * are final and can be combined.
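A worklist version of the construction shows how few of the 32 subsets actually get used. This is a sketch under the same assumption about the NDFA's shape (s0 and s4 loop on everything, with the chain m, a, i, n between them). The character 'x' is a stand-in chosen here for "every symbol other than m, a, i, n", and the function names are invented for the example.

```c
#include <stddef.h>

enum { NSTATES = 5, NSUBSETS = 1 << NSTATES };

/* NDFA move on one symbol; bit k of a mask stands for state sk. */
static unsigned move_on(unsigned set, char c)
{
    static const char label[] = "main";     /* sk --label[k]--> s(k+1) */
    unsigned next = set & 0x11u;            /* s0 and s4 loop on every symbol */

    for (int k = 0; k < 4; k++)
        if (((set >> k) & 1u) && c == label[k])
            next |= 1u << (k + 1);
    return next;
}

/* Marks seen[m] = 1 for every subset m reachable from {s0} and returns
   how many such subsets there are. */
int reachable_subsets(unsigned char seen[NSUBSETS])
{
    static const char alphabet[] = "mainx"; /* 'x': any other symbol */
    unsigned work[NSUBSETS];                /* each subset is pushed at most once */
    int top = 0, count = 0;

    for (int i = 0; i < NSUBSETS; i++)
        seen[i] = 0;
    seen[1] = 1;                            /* mask 1 is {s0} */
    work[top++] = 1;
    while (top > 0) {
        unsigned cur = work[--top];
        count++;
        for (size_t j = 0; alphabet[j] != '\0'; j++) {
            unsigned nxt = move_on(cur, alphabet[j]);
            if (!seen[nxt]) {
                seen[nxt] = 1;
                work[top++] = nxt;
            }
        }
    }
    return count;
}
```

Only 8 subsets are reachable, and the empty set is not among them. Four of the eight contain s4; merging those four gives a 5-state DFA.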

FA can only ``count'' a finite number of input scenarios -- each state can represent a counter, or a "different count": e.g. 0,1,2,3,..... Thus no FA can recognize:

Binary strings with equal number of 1's and 0's.

Strings over '(', ')' that are parenthesis-balanced.

The pumping lemma proves these limitations. (Just a counting argument). How could you do those two tasks? (Hint: use a stack!).
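One possible answer for the parenthesis case, as a sketch: with a single kind of bracket, every stack entry is the same, so the stack collapses to its height. The function name `balanced` is made up here, and characters other than '(' and ')' are simply skipped.

```c
/* 1 if s is parenthesis-balanced, 0 otherwise. */
int balanced(const char *s)
{
    long depth = 0;                 /* height of the implicit stack */

    for (; *s; s++) {
        if (*s == '(') {
            depth++;                /* push */
        } else if (*s == ')') {
            if (depth == 0)
                return 0;           /* pop from an empty stack */
            depth--;                /* pop */
        }
    }
    return depth == 0;              /* nothing left open */
}
```

The counter has no fixed upper bound, which is exactly what a finite set of states cannot provide.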

FA → equivalent RE, vice-versa. Constructive proof in each direction producing FA or RE that accept the same language as the input RE or FA. The algorithm that makes FA from RE is what e.g. lex, grep do.

Alphabet = {0,1}

Language has "even number of 0s".

Start with ε; 00 is the first nontrivial case. Then stick any number of 1s in the cracks:

1*01*01*

Can Kleene * that for as many pairs of 0's as wanted:

(1*01*01*)*

As written, this cannot produce a nonempty string of only 1's (zero 0's is even, too). Moving the last spasm of 1's outside the star fixes that, and is maybe simpler:

(1*01*0)*1*
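A brute-force check is a cheap way to gain confidence in an RE like this. The sketch below assumes a POSIX system (it uses `regcomp` and `regexec` from `<regex.h>`); the helper name `agrees_with_parity` and the length cap are choices made for the example. It compares the pattern against a direct count of 0's on every binary string up to a given length.

```c
#include <regex.h>
#include <stddef.h>

/* 1 if the anchored POSIX ERE `pattern` accepts exactly the binary strings
   of length 0..maxlen that contain an even number of '0's, else 0. */
int agrees_with_parity(const char *pattern, int maxlen)
{
    regex_t re;
    char buf[32];
    int ok = 1;

    if (maxlen > 20)                            /* keep the enumeration small */
        return 0;
    if (regcomp(&re, pattern, REG_EXTENDED | REG_NOSUB) != 0)
        return 0;
    for (int len = 0; len <= maxlen && ok; len++) {
        for (unsigned long bits = 0; bits < (1ul << len) && ok; bits++) {
            int zeros = 0;
            for (int i = 0; i < len; i++) {
                buf[i] = ((bits >> i) & 1ul) ? '1' : '0';
                zeros += (buf[i] == '0');
            }
            buf[len] = '\0';
            int matched = (regexec(&re, buf, 0, NULL, 0) == 0);
            ok = (matched == (zeros % 2 == 0));
        }
    }
    regfree(&re);
    return ok;
}
```

With the pattern "^(1*01*0)*1*$" the check passes. With "^(1*01*01*)*$" it fails, because that form cannot match a nonempty string made only of 1's.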

Simulation leads to

Add a dead state if necessary so every state has an outgoing transition on every input symbol.

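Inductively: Initially place the states of the DFA into two equivalence classes: final states and non-final states. Then repeatedly search for an equivalence class X and an input symbol c such that, on c, the states in X make transitions to states in more than one class; partition X so that all states in each new class move to the same old class on c. When no class can be partitioned this way, we are done.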

In our example, the original placement puts states A, B, and E in one class (final states) and C and D in another. In all cases, a 1 leaves us in the current class, while a 0 takes us to the other class. Consequently, no class requires partitioning, and we are left with a two-state machine.
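The refinement loop can be sketched as follows. The transition table here is hypothetical: the lecture's diagram is not reproduced, so `example_delta` is just one 5-state DFA that fits the description (A, B, E final; a 1 stays in the current class, a 0 crosses to the other). The function splits classes on all symbols at once rather than one symbol at a time, which should reach the same final partition.

```c
enum { N = 5, NSYM = 2 };               /* states A..E are 0..4; symbols 0, 1 */

/* Hypothetical DFA consistent with the description in the text. */
const int example_delta[N][NSYM] = {
    /* A */ {2, 1},  /* B */ {2, 1},  /* C */ {4, 3},
    /* D */ {4, 3},  /* E */ {2, 1}
};
const int example_final[N] = {1, 1, 0, 0, 1};

/* Refines the final/non-final split until it stops changing.  Writes each
   state's class number into cls[] and returns the number of classes. */
int minimize(const int delta[N][NSYM], const int is_final[N], int cls[N])
{
    int nclasses = 0, prev;

    for (int s = 0; s < N; s++)
        cls[s] = is_final[s] ? 1 : 0;
    do {
        int next[N];
        prev = nclasses;
        nclasses = 0;
        for (int s = 0; s < N; s++) {
            next[s] = -1;
            /* Join an earlier state that has the same current class and
               goes to the same class on every symbol. */
            for (int t = 0; t < s && next[s] < 0; t++) {
                int same = (cls[s] == cls[t]);
                for (int c = 0; c < NSYM && same; c++)
                    same = (cls[delta[s][c]] == cls[delta[t][c]]);
                if (same)
                    next[s] = next[t];
            }
            if (next[s] < 0)
                next[s] = nclasses++;
        }
        for (int s = 0; s < N; s++)
            cls[s] = next[s];
    } while (nclasses != prev);         /* a refinement with no new class is stable */
    return nclasses;
}
```

On the example table no class ever splits, so the result is two classes: {A, B, E} and {C, D}.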

Replace each state in the input DFA by one with a corresponding RE. Eliminate states one by one, replacing them with RE describing that portion of the input string that labels the transitions into and out of the state being eliminated.

Turns out to be a dynamic programming algorithm, which means you save sub-solutions as you go along since they will be useful (possibly several times) in solving future sub-problems in the recursion. (e.g. computing Fibonacci numbers).
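The Fibonacci example of saving sub-solutions might look like the sketch below. It is not the state-elimination algorithm itself, only the memoization idea it relies on. The table size and the choice to return 0 for out-of-range arguments are assumptions made to keep the example short.

```c
enum { MAXN = 90 };                     /* fib(90) still fits in 64 bits */

/* fib(0) = 0, fib(1) = 1.  Each value is computed once and then looked up. */
unsigned long long fib(unsigned n)
{
    static unsigned long long memo[MAXN + 1];   /* 0 means "not computed yet" */

    if (n < 2)
        return n;
    if (n > MAXN)
        return 0;                       /* out of range for this sketch */
    if (memo[n] == 0)
        memo[n] = fib(n - 1) + fib(n - 2);
    return memo[n];
}
```

Without the table, the two recursive calls recompute the same values over and over; with it, each fib(k) is computed once.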

Details in Scott's FLAT material: e-Reserve: Programming Pragmatics (PP) Chapter 2, BB course material: reading from PP CD.

The following formalisms for string languages are equivalent: