More Sorts and some Related Topics

Linear-Time Sorts: Bucket Sort

Weiss Ch. 7.11

Extra information about (constraints on) the items to be sorted may render the sort non-general and thereby allow quick tricks for sorting.

Say the N input items are positive integers smaller than M. The obvious idea is an M-long 'bucket' array of item counts: read through the input and update the array, then scan the array to write the output, O(M+N) in all. How does this beat the N log N bound for sorting? Indexing into a bucket makes an M-way choice, not a binary comparison.
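A minimal Python sketch of the counting idea, assuming the items are integers in the range 0..M-1. The name `bucket_sort` is only illustrative and is not taken from Weiss.

```python
def bucket_sort(items, m):
    """Sort integers in range(m) using an m-long array of counts."""
    counts = [0] * m                  # counts[v] = how many times v occurs
    for v in items:                   # one pass over the input: O(N)
        counts[v] += 1                # indexing is the "M-way choice"
    out = []
    for v, c in enumerate(counts):    # one pass over the buckets: O(M)
        out.extend([v] * c)
    return out


print(bucket_sort([5, 3, 9, 3, 1], 10))
```

No two items are ever compared with each other, which is why the comparison-sort lower bound does not apply here.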

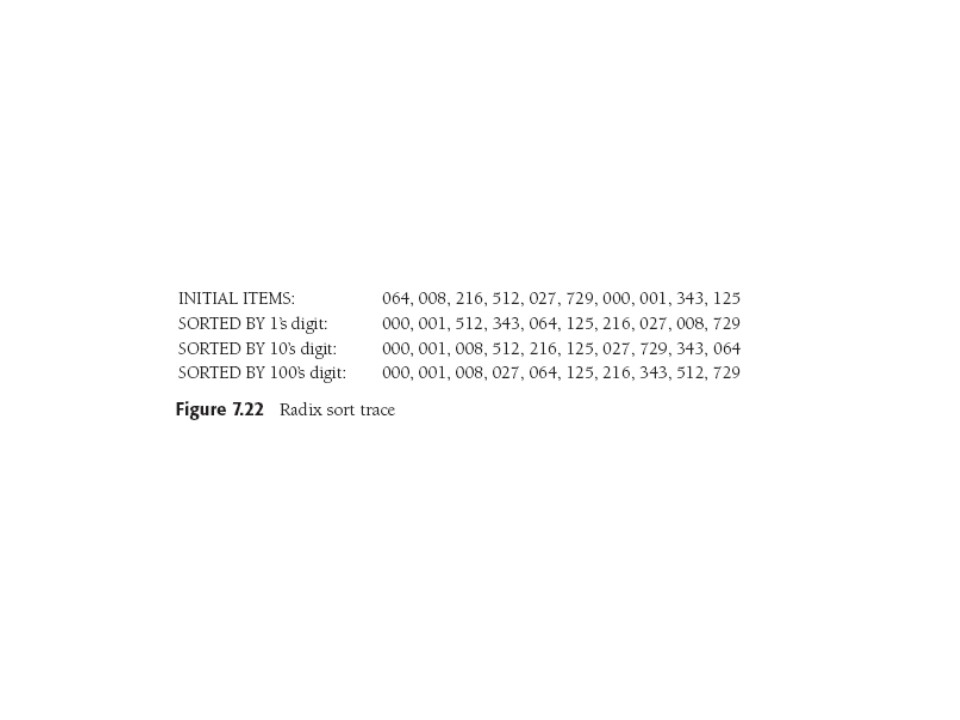

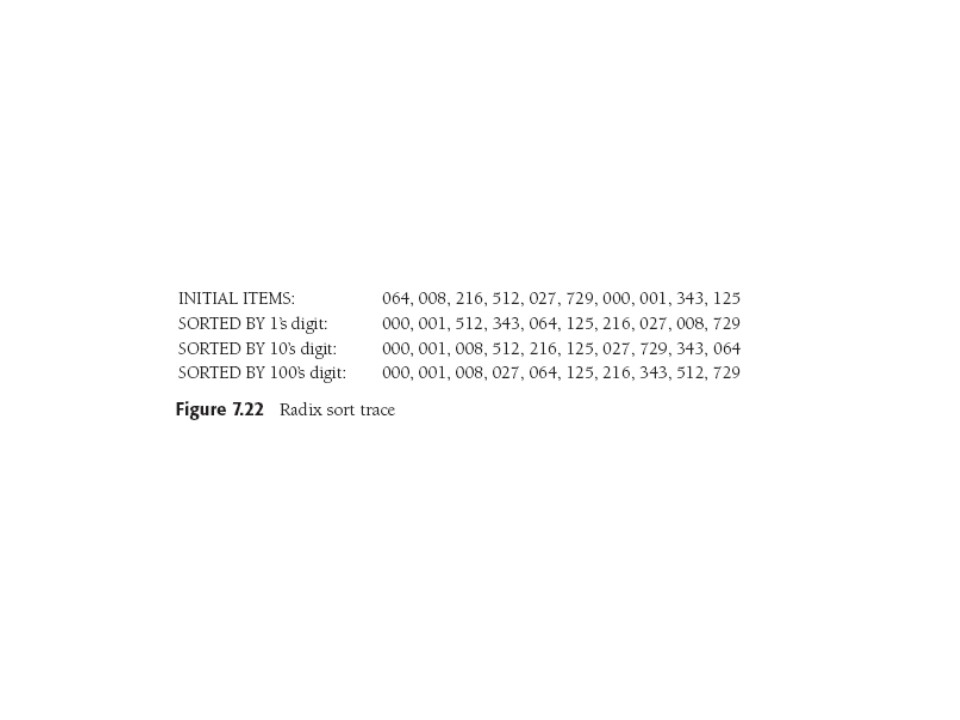

Linear-Time Sorts: Radix Sort

I remember mechanical (IBM, Hollerith, punched) card sorters.

Multi-pass, sorting on one digit of the key at a time.

Every such pass of the sort was a bucket sort into one of (let's say)

10 bins. With 4 passes you could sort 10000 cards.

Q. Which digit (low or high-order) do you want to sort first?

Notice that items that agree in the digit for the current pass retain

the ordering given them by prior passes. This is the technical

definition of a stable sort.

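Without physical bins we'd use lists for the buckets. Weiss tells us that the time is $O(p(N+b))$, with p the number of passes, N the item count, and b the number of buckets. Seems to me $p = \log_b N$, at least when the keys are about as large as N; in general p is the number of base-b digits in the largest key.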

Weiss gives code for sorting strings (256 buckets for ASCII chars)

using two radix sorts, including the "counting" radix sort, which

is rather clever and minimizes temporary storage.
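The multi-pass idea can be sketched in Python as follows. This is a simple list-of-buckets version for non-negative integers, not Weiss's string code or his counting radix sort; `radix_sort` and its `base` parameter are illustrative names. It sorts on the low-order digit first and relies on each pass being stable.

```python
def radix_sort(keys, base=10):
    """LSD radix sort of non-negative integers, one stable bucket pass per digit."""
    keys = list(keys)
    if not keys:
        return keys
    largest = max(keys)
    place = 1                                   # 1, base, base**2, ...
    while place <= largest:
        buckets = [[] for _ in range(base)]
        for k in keys:                          # appending keeps ties in arrival order
            buckets[(k // place) % base].append(k)
        keys = [k for bucket in buckets for k in bucket]
        place *= base
    return keys


print(radix_sort([170, 45, 75, 90, 802, 24, 2, 66]))
```

Each pass costs O(N + b), and the loop runs once per digit of the largest key, which gives the O(p(N + b)) total quoted above.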

External Sorts

Weiss 7.12

If the data can't fit into memory, access times for some items are grotesquely lengthened, certainly by enough to motivate new sorting techniques that minimize disk or tape accesses. The whole analysis becomes highly device- and configuration-dependent.

Text's restrictive model: tapes! Items are only accessible "efficiently" (but slowly) in sequential order (both directions). Need two tapes to beat $O(N^2)$, more to make it easier.

With tapes, merges are very natural, so the sort uses merges as in mergesort. The text uses four tapes, with the straightforward idea of reading in-memory-sortable blocks of size M, sorting them, writing the sorted blocks out, and merging; rinse and repeat.
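A rough Python sketch of that block-then-merge scheme, with plain lists standing in for tapes. The function names are illustrative, and the pass counter counts merge passes only, not the initial run-building pass.

```python
import heapq


def make_runs(items, m):
    """Cut the input into blocks of at most m items and sort each 'in memory'."""
    return [sorted(items[i:i + m]) for i in range(0, len(items), m)]


def merge_pass(runs):
    """Merge neighboring runs pairwise, roughly halving the number of runs."""
    return [list(heapq.merge(*runs[i:i + 2])) for i in range(0, len(runs), 2)]


def external_sort(items, m):
    """Return (sorted items, number of merge passes)."""
    runs = make_runs(items, m)
    passes = 0
    while len(runs) > 1:
        runs = merge_pass(runs)
        passes += 1
    return (runs[0] if runs else []), passes


data = [81, 94, 11, 96, 12, 35, 17, 99, 28, 58, 41, 75, 15]
print(external_sort(data, 3))
```

With 13 items and M = 3 there are 5 initial runs, so the two-way merge needs 3 passes (5 runs, then 3, then 2, then 1).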

With four tapes it's pretty easy to see and analyze the requisite work, and more tapes mean multi-way merges can be done for fewer passes through the data. The polyphase merge algorithm uses k+1 tapes to do what it would seem needs 2k tapes. Replacement selection is a cute way to construct the M-long sortable arrays in core memory in a smart adaptive way that takes advantage of their shrinking as items are sorted and merged out to tape. (The algorithm uses a p-queue ;-} ).
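Replacement selection can be sketched in Python with `heapq` as the priority queue. This is one simple reading of the idea under the assumption that memory holds at most m items in total (queue plus held-back items); the names are illustrative.

```python
import heapq
from itertools import islice


def replacement_selection(items, m):
    """Build sorted runs using memory for m items; runs may be longer than m."""
    source = iter(items)
    heap = list(islice(source, m))          # fill memory with the first m items
    heapq.heapify(heap)
    runs, current, held_back = [], [], []
    while heap:
        smallest = heapq.heappop(heap)
        current.append(smallest)            # "write" the smallest item to the run
        for incoming in islice(source, 1):  # read at most one replacement item
            if incoming >= smallest:
                heapq.heappush(heap, incoming)   # still fits in the current run
            else:
                held_back.append(incoming)       # must wait for the next run
        if not heap:                        # queue drained: the run is finished
            runs.append(current)
            current = []
            heap, held_back = held_back, []
            heapq.heapify(heap)
    return runs


data = [81, 94, 11, 96, 12, 35, 17, 99, 28, 58, 41, 75, 15]
print(replacement_selection(data, 3))
```

On this input with m = 3 the sketch produces three runs instead of the five that fixed-size blocks would give, so fewer merge passes are needed afterward.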

Lower Bounds

Weiss 7.8 -- 7.10

Is N log N the best we can do on sorting? Yes! Worst case and average, as it turns out. That is:

Any sort that uses only comparisons requires ⌈log(N!)⌉ comparisons in the worst case and log(N!) comparisons on average.

Sorting: Information Theoretic Argument

If there are P cases to distinguish, it takes at least ⌈log(P)⌉ YES/NO questions in the worst case. Thus a binary "20 questions" could distinguish a million cases, since $2^{20} \approx 10^6$ (of course the canonical first question is "animal, vegetable, or mineral?" as I recall, so $3 \cdot 2^{19}$, about 1.5M).

So with N! permutations, we need the log of N!, which is one of the things that Stirling's approximation (well-known, often-used, cf. Wikipedia) is good for. It says:

$$\ln N! = N \ln N - N + O(\ln N),$$

so there we are. If the next term in the series approximation is included, we see the more familiar

$$N! \approx \sqrt{2\pi N}\,\left(\frac{N}{e}\right)^{N}.$$
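A quick numerical check of these formulas in Python, as a sketch. The helper names are made up for illustration; `math.lgamma(n + 1)` is used as a reference value for ln n!.

```python
import math


def comparisons_lower_bound(n):
    """ceil(log2(n!)): worst-case comparisons for any comparison sort of n items."""
    return math.ceil(math.log2(math.factorial(n)))


def stirling_ln_factorial(n):
    """ln n! approximated by n ln n - n + (1/2) ln(2 pi n)."""
    return n * math.log(n) - n + 0.5 * math.log(2 * math.pi * n)


for n in (3, 4, 5, 10):
    print(n, comparisons_lower_bound(n))

# Compare Stirling's approximation with the reference value for ln 100!
print(stirling_ln_factorial(100), math.lgamma(101))
```

For example, sorting 5 items needs at least 7 comparisons in the worst case, because 5! = 120 lies between 2^6 and 2^7.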

Sorting: Decision Tree Argument

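An implementation of the information-theoretic argument, if you like, is to consider a binary decision tree, in which each node holds the set of outcomes still consistent with the answer to the binary question asked at its parent (for instance, in sorting items a, b, c, one question might be "is a < b?").

The proof thus comes down to rather simple analysis of binary trees: a binary tree of depth d has at most $2^d$ leaves, and a decision tree for sorting needs at least N! leaves (one per ordering), so its depth is at least ⌈log(N!)⌉.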
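To see that log(N!) really grows like N log N without invoking Stirling, one standard estimate (logs base 2) keeps only the larger half of the terms:

```latex
\begin{align*}
\log(N!) &= \log N + \log(N-1) + \cdots + \log 2 + \log 1 \\
         &\ge \log N + \log(N-1) + \cdots + \log(N/2) \\
         &\ge \frac{N}{2}\,\log\frac{N}{2} \\
         &= \frac{N}{2}\log N - \frac{N}{2} \\
         &= \Omega(N \log N)
\end{align*}
```

So a decision tree with N! leaves has depth Ω(N log N), which is the comparison-sort lower bound.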

Selection: Decision-Tree Argument

Weiss 7.9 proves three lower bounds for selection problems, which (except for the median) are tight (have corresponding algorithms that take just this much time).

- N - 1 comparisons are needed to find the smallest item.

- N + ⌈log N⌉ - 2 to find the two smallest items.

- ⌈3N/2⌉ - O(log N) to find the median.
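The second bound is met by a knockout tournament: the runner-up must have lost directly to the winner, so only the winner's opponents need to be searched. A Python sketch, assuming at least two items; the function name and the comparison counter are illustrative.

```python
def two_smallest(items):
    """Return (smallest, second_smallest, comparisons) via a knockout tournament."""
    comparisons = 0
    # Each contestant carries the list of items it has beaten directly.
    contestants = [(x, []) for x in items]
    while len(contestants) > 1:
        next_round = []
        for i in range(0, len(contestants) - 1, 2):
            (a, a_beat), (b, b_beat) = contestants[i], contestants[i + 1]
            comparisons += 1
            if a <= b:
                a_beat.append(b)
                next_round.append((a, a_beat))
            else:
                b_beat.append(a)
                next_round.append((b, b_beat))
        if len(contestants) % 2:
            next_round.append(contestants[-1])   # odd one out gets a bye
        contestants = next_round
    winner, beaten = contestants[0]
    second = beaten[0]
    for x in beaten[1:]:                 # at most ceil(log2 N) - 1 more comparisons
        comparisons += 1
        if x < second:
            second = x
    return winner, second, comparisons


print(two_smallest([5, 3, 8, 1, 9, 2, 7, 4]))
```

The tournament uses N - 1 comparisons and the winner plays at most ⌈log N⌉ matches, so the total is at most N + ⌈log N⌉ - 2.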

These claims are proved by a series of easy (but for lemma 7.5, which

takes a bit of reasoning) steps. What is important is not the results

or the details, but the idea of mapping the question into a structure

(here a tree) whose properties are computable and correspond to the

answers we seek. A general, good idea (and skill).

Selection: Adversary Lower Bounds

Another basic technique for figuring out lower bounds on algorithm complexity is to imagine an "evil demon" constructing your input. Such arguments often provide much tighter bounds than either information-theoretic or decision-tree arguments. In fact the first bound (N-1 comparisons to find the minimum) was really computed using a sort of informal adversarial argument, not really a decision-tree argument at all.

To be rude about decision trees: their bounds are often unhelpful and optimistic-sounding. There are N choices for the minimum, so the information-theoretic bound and the resulting decision-tree argument say "you'll need at least log N comparisons (at least 20 for a million items)". True enough, but that is a much sloppier and less informative bound than "you'll need N-1 (999,999 for a million items)".

In this section, just to show off the adversarial method, Weiss shows

⌈3N/2⌉ -2 comparisons are necessary to find both

largest and smallest items.

What's interesting about this section is not the above bound (cool and

all that, but...), it's the adversarial method at work that we care about.
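For context, the bound is tight: processing the items in pairs finds both extremes in exactly ⌈3N/2⌉ - 2 comparisons. The Python sketch below shows that matching algorithm (an upper bound, not the adversary argument itself), assuming at least one item; the names are illustrative.

```python
def min_and_max(items):
    """Return (smallest, largest, comparisons) by processing the items in pairs."""
    n = len(items)
    comparisons = 0
    if n % 2:                          # odd N: the first item seeds both candidates
        lo = hi = items[0]
        start = 1
    else:                              # even N: one comparison seeds both candidates
        comparisons += 1
        if items[0] < items[1]:
            lo, hi = items[0], items[1]
        else:
            lo, hi = items[1], items[0]
        start = 2
    for i in range(start, n, 2):       # three comparisons per remaining pair
        a, b = items[i], items[i + 1]
        comparisons += 3
        small, large = (a, b) if a < b else (b, a)
        if small < lo:
            lo = small
        if large > hi:
            hi = large
    return lo, hi, comparisons


print(min_and_max([7, 2, 9, 4, 1, 8]))
```

Only the smaller of each pair can be the new minimum and only the larger can be the new maximum, which saves one comparison per pair over two separate scans.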

Adversarial Method

Example: N-1 comparisons needed to find minimum.

Every element x, except the smallest, must be involved in a comparison with some other element y in which x > y. Otherwise, if there were two different elements that had NOT been declared larger than any other, then either could be the smallest.

Generally:

- Establish that some basic, minimal amount of information must be

obtained by any algorithm that solves a problem.

- In each step, the adversary can provide an input that is

consistent with all answers so far given by the algorithm.

- With insufficient steps there are multiple consistent inputs that

would provide different answers: for any answer from the algorithm the

adversary could provide a contradictory input.

Adversarial Method Example 1

N-1 comparisons needed to find minimum. Simple adversarial strategy (Table 7.20). I'm your adversary: I make up an input (which I can always show you and not get caught lying) that forces N-1 questions.

U is unknown, E is eliminated, a-e are my numbers, and 0, 1, 2, ... are the values I give them (dynamically, during questioning, and invisible to you!). INFO means you learn something (one number is < another), NOINFO means you don't.

    a b c d e
    U U U U U
    0 0 0 0 0   (my current set of values)

You: a < b? Me: yep! (U-U) INFO

    a b c d e
    U E U U U   (b can't be smallest)
    0 1 0 0 0   (current values, consistent with the questions so far)

You: d < e? Me: Yes. (U-U) INFO

    a b c d e
    U E U U E   (e can't be smallest)
    0 1 0 0 2

You: b < e? Me: Yes! (true) (E-E) NOINFO

You: a < e? Me: Yes! (true, so I don't eliminate) (U-E) NOINFO

...

Need to eliminate N-1 numbers, so need N-1 (U-U) questions.
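The adversary can be simulated in Python. This is a sketch of one consistent strategy, not a transcription of Table 7.20: as an assumption of the sketch, eliminated items receive values in decreasing order (N, N-1, ...), so that a newly eliminated item stays below everything it was earlier declared smaller than. All names are illustrative, and queries are assumed to involve two different items.

```python
class MinAdversary:
    """Answers 'is x < y?' while building a consistent input on the fly."""

    def __init__(self, names):
        self.value = {name: 0 for name in names}   # every Unknown item has value 0
        self.unknown = set(self.value)
        self.next_value = len(self.value)          # values handed out downward
        self.uu_questions = 0
        self.log = []                              # (x, y, answer) for checking

    def less(self, x, y):
        if x in self.unknown and y in self.unknown:
            # U-U: the only informative question. Say yes and eliminate y.
            self.uu_questions += 1
            self.unknown.discard(y)
            self.value[y] = self.next_value
            self.next_value -= 1
            answer = True
        elif x in self.unknown or y in self.unknown:
            answer = x in self.unknown             # U-E: the Unknown item is smaller
        else:
            answer = self.value[x] < self.value[y] # E-E: answer from the values
        self.log.append((x, y, answer))
        return answer


def find_min(names, less):
    """A plain left-to-right scan, standing in for 'your' algorithm."""
    best = names[0]
    for name in names[1:]:
        if less(name, best):
            best = name
    return best


adv = MinAdversary("abcde")
print(find_min(list("abcde"), adv.less), adv.uu_questions)
```

Any algorithm that stops while two items are still Unknown can be contradicted, since the adversary may make either of them the smallest; that is why N-1 U-U questions are forced.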

Adversarial Method Example 2

Finding minimum and maximum... harder!

To prove that ⌈3N/2⌉ - 2 comparisons are necessary to find both the largest and smallest items, we must produce a rather impressive table of the adversary's fiendish input construction strategy. It's not immediately obvious (need to read p. 309), but the idea is for the adversary to avoid giving out more than one unit of information per question. E.g., if you ask whether x > y for a new x and y, the answer tells you that one is not the minimum and one is not the maximum: two units of info. The adversary must minimize that sort of thing...

Last update: 10/23/13