|

Blackboard

|

|

Your task in this assignment is to parallelize an existing sequential program, written in Java, that constructs a (Euclidean) minimum spanning tree (MST) for a collection of points in the plane.

As you may recall from a data structures or algorithms class, there are well-known MST algorithms that run in time O(n2), where n is the number of nodes, and others that run in time O(m log n), where m is the number of edges. The latter is better, of course, if and only if m << n2/log n. For points in the plane, with Euclidean distance and no explicitly specified edges, one might be inclined to assume that O(n2) is the best we can do, but this is not the case. It can be proven that the edges of the MST must be a subset of the edges in the Delaunay triangulation of the given points.

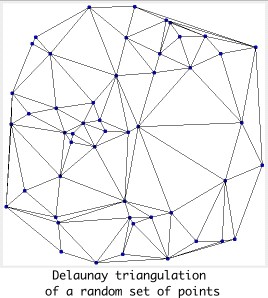

A triangulation of points in the plane is a maximal set of line

segments whose endpoints are among the given points and which do not

otherwise intersect. If one were to stretch a

rubber band around the given points, a triangulation divides the

interior space into triangles; hence

the name.

The Delaunay triangulation (example at left) has the property

that the circumcircle of the vertices of a triangle (the unique

circle on which all three vertices lie) contains no other point in the

set. One can prove that the Delaunay triangulation of a set is unique

if no four points lie on the same circle and no three points lie on the

same line. One can also prove that the

Delaunay triangulation maximizes the minimum corner angle across all

triangles. Delaunay triangulations tend to be pleasing to the eye.

They are used in graphics rendering and mechanical simulation.

They are also related in interesting ways to the notions of convex

hull and Voronoi

diagram.

Note that the number of edges in a triangulation is O(n).

A triangulation of points in the plane is a maximal set of line

segments whose endpoints are among the given points and which do not

otherwise intersect. If one were to stretch a

rubber band around the given points, a triangulation divides the

interior space into triangles; hence

the name.

The Delaunay triangulation (example at left) has the property

that the circumcircle of the vertices of a triangle (the unique

circle on which all three vertices lie) contains no other point in the

set. One can prove that the Delaunay triangulation of a set is unique

if no four points lie on the same circle and no three points lie on the

same line. One can also prove that the

Delaunay triangulation maximizes the minimum corner angle across all

triangles. Delaunay triangulations tend to be pleasing to the eye.

They are used in graphics rendering and mechanical simulation.

They are also related in interesting ways to the notions of convex

hull and Voronoi

diagram.

Note that the number of edges in a triangulation is O(n).

The program we are giving you includes an implementation of Dwyer’s Delaunay triangulation algorithm (a refinement of the earlier algorithm of Guibas & Stolfi), which runs in time O(n log n). Using the resulting mesh, the program then runs Kruskal’s algorithm to create the MST. Kruskal’s algorithm, given the linear number of edges, is also O(n log n), but the constant is much smaller: in the sequential program, creation of the mesh takes almost 95% of the total run time.

The program is designed to be run from the command line, where you can specify various start-up parameters (see below). In interactive modes, it opens a square display containing N blue dots (nodes), and a series of control buttons.

Source code is in the files

MST.java and

Coordinator.java,

which you can view in, and save from, your browser.

Executable code lies in 30-some .class files, many of which

are for the user interface. They are generated by running

the source files through the Java compiler, javac.

You will be running this assignment on

node2x14a.csug.rochester.edu and

node2x18a.csug.rochester.edu.

Each of these machines has two processor chips. The smaller

machine has 14 cores per chip, the larger 18 cores per chip. Each

core has 2 hardware contexts (hyperthreads). This means

the machines can execute up to 56 or 72 threads in

parallel. You will probably find that your code runs faster with 2, 4, or

even 8 threads, but probably slows down again before it gets to 32, due to

thread creation overhead, lack of available concurrency, and/or bus,

memory, or ALU contention.

Note that node2x14a

and node2x18a

are visible only inside the

csug firewall.

Only cycle1, cycle2, and cycle3

are visible from outside.

Thus to run timing experiments from outside the firewall you must first

ssh to one of the cycle machines and

then ssh from there to

node2x14a

or node2x18a.

As the due date approaches, we will reserve much of the time on

node2x18a for timing experiments, with a sign-up system that

allows you to obtain exclusive access to the machine

(node2x14a will remain available for development).

Note that you

will almost certainly not be able to get last-minute exclusive access, and

since results of timing experiments are required for full credit on the

assignment, you will need to plan to have your code ready for testing

ahead of the due date.

The code we are giving you accepts four command-line arguments:

You can run the application remotely in animation modes 2 and 3, with X11

forwarding over ssh, but it will be choppy. You will

probably get better results with -Y (insecure) forwarding

rather than -X.

You will want to use mode 0 for timing tests—otherwise the program

will spend all its time generating output, and you won’t really

be measuring anything of interest.

You are welcome to do development on a personal machine, but

while Java implementations are very portable, you will need to

test your code and collect performance results at csug.

You’ll almost certainly want to use a 64-bit Java installation;

otherwise you’ll run out of

heap space with more than about 70,000 points, and a problem of that

size can be solved on a single core of a modern laptop in about a second.

The installation on node2x14a

and node2x18a runs in 64-bit mode.

A 1,000,000-point run on a single core of

node2x14a takes about 12 seconds.

The write-up requirements for this assignment are more extensive than they

have been for past assignments.

In addition to parallelizing the code and describing what you did, you

must evaluate the success of your parallelization. Using

node2x18a, for some convenient number of points, create a graph

that plots execution time as a function of the number of threads, varying

that number from 1 to 64.

(You do not necessarily have to plot every possible thread

count—that would take a lot of experimentation time. Thread

counts of, say, 1, 2, 3, 4, 6, 8, 12, 16, 24, 32, 48, and 64 should

suffice.)

Also plot the speedup of your

code: the run time of the original (unmodified!) sequential version

divided by the run time of your parallel version. Ideally,

you’d see a speedup of k with k threads. How

close do you come? What bottleneck(s) keep you from doing

better?

As in previous assignments, you may work alone or in teams of two. If you choose to work in pairs, a natural division of labor is for one partner to parallelize the Dwyer (triangulation) stage of the program and the other to parallelize the Kruskal (MST) stage. You’ll find that the Dwyer code is much more complicated, but it has a natural divide-and-conquer parallelization. The Kruskal code is much simpler—you’ll find it easy to understand—but parallelization is more challenging. The easiest strategy is probably to retain the serial iteration over edges, allow threads to identify the subtrees they want to merge (an O(log n) operation) in parallel, and then force the merges to actually complete in order (possibly starting over if they discover that a previous merge has changed which edges are in which subtrees). You may discover, however, that the condition synchronization for this strategy consumes more time than it saves; you’ll want to address this in your write-up.

I’ve coded up a solution along the lines described in the previous paragraph. If you’d like to try it out, run

java -jar /u/cs254/bin/MST.jar <command-line arguments>

Be sure to follow all the rules on the Grading page. As with all assignments,

use the turn-in script:

~cs254/bin/TURN_IN. Put your write-up in a

README.pdf file in the directory in

which you run the script. Be sure to describe any

features of your code that the TAs might not immediately notice.

By 11:59pm on Sunday, Apr. 13, each student should complete the T4 trivia assignment found on Blackboard.